"Bottom-up" proteomics

[The following is part seven in a series of stories that highlight recent discoveries enabled by the Stampede supercomputer. Read parts one, two, three, four, five, and six to find out how Stampede is making a difference through science and engineering.]

Tandem protein mass spectrometry is one of the most widely used methods in proteomics, the large-scale study of proteins, particularly their structures and functions.

Researchers in the Marcotte group at the University of Texas at Austin are using the Stampede supercomputer to develop and test algorithms that interpret proteomics mass spectrometry data more accurately and efficiently.

The researchers are midway through a project to analyze the largest animal proteomics dataset ever collected, equivalent to roughly half of all shotgun proteomics data currently in the public domain. The samples are protein extracts from a wide variety of tissues and cell types, taken from across the animal tree of life.

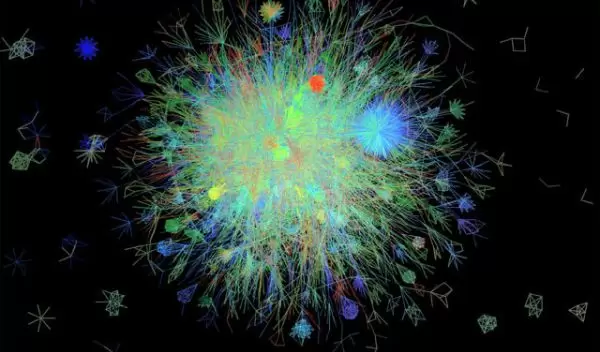

The analyses consume considerable computing cycles and require Stampede's large-memory nodes, but they allow the group to reconstruct the 'wiring diagrams' of cells by learning how all of the proteins encoded by a genome are associated into functional pathways, systems, and networks. Such models let scientists better define the functions of genes and link genes to traits and diseases.

"Researchers would usually analyze these sorts of datasets one at a time," Edward Marcotte said. "TACC let us scale this to thousands."

Marcotte's work was featured in the New York Times in August 2012.