Brown Dog: A search engine for the other 99 percent (of data)

We've all experienced the frustration of trying to access information on websites, only to find that we can't open the files.

"The information age has made it easy for anyone to create and share vast amounts of digital data, including unstructured collections of images, video and audio as well as documents and spreadsheets," said Kenton McHenry, who, along with Jong Lee, leads the Image and Spatial Data Analysis division at the National Center for Supercomputing Applications (NCSA). "But the ability to search and use the contents of digital data has become exponentially more difficult."

That's because digital data is often trapped in outdated, difficult-to-read file formats and because metadata--the critical data about the data, such as when and how and by whom it was produced--is nonexistent.

Led by McHenry, a team at NCSA is working to change that. Recipients in 2013 of a $10 million, five-year award from the National Science Foundation (NSF), the team is developing software that allows researchers to manage and make sense of vast amounts of digital scientific data that is currently trapped in outdated file formats.

The NCSA team, in partnership with faculty at the University of Illinois at Urbana-Champaign, Boston University and the University of Maryland, recently demonstrated two services to make the contents of uncurated data collections accessible.

The first service, the Data Access Proxy (DAP), transforms unreadable files into readable ones by linking together a series of computing and translational operations behind the scenes.

Like the settings for an Internet gateway, the configuration of the Data Access Proxy would be entered into a user's machine settings and then forgotten. From then on, data requests over HTTP would first be examined by the proxy to determine whether the native file format is readable on the client device. If not, the DAP would be called in the background to convert the file into the best possible format readable by the client machine.
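The idea of chaining conversions can be illustrated with a minimal sketch. It is not the Brown Dog implementation: the converter registry `CONVERSIONS`, the format names in it, and the function `find_conversion_chain` are all hypothetical, and a breadth-first search stands in for whatever the DAP actually does to pick the "best possible format."

```python
from collections import deque

# Hypothetical converter registry: each key is a format that some tool
# can convert into the listed target formats. Illustrative pairs only.
CONVERSIONS = {
    "wpd": ["doc"],
    "doc": ["odt", "pdf"],
    "odt": ["pdf", "html"],
    "pcx": ["bmp"],
    "bmp": ["png"],
}


def find_conversion_chain(source_format, readable_formats):
    """Return the shortest list of formats leading from source_format to
    any format in readable_formats, or None if no chain exists."""
    if source_format in readable_formats:
        return [source_format]  # already readable, no conversion needed
    queue = deque([[source_format]])
    seen = {source_format}
    while queue:
        path = queue.popleft()
        for next_format in CONVERSIONS.get(path[-1], []):
            if next_format in seen:
                continue
            if next_format in readable_formats:
                return path + [next_format]
            seen.add(next_format)
            queue.append(path + [next_format])
    return None


# A client that can only open PDF and PNG requests an old WordPerfect file.
print(find_conversion_chain("wpd", {"pdf", "png"}))
```

Here the shortest chain is preferred on the assumption that each conversion step risks losing some information; a real service might weigh conversion quality instead.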

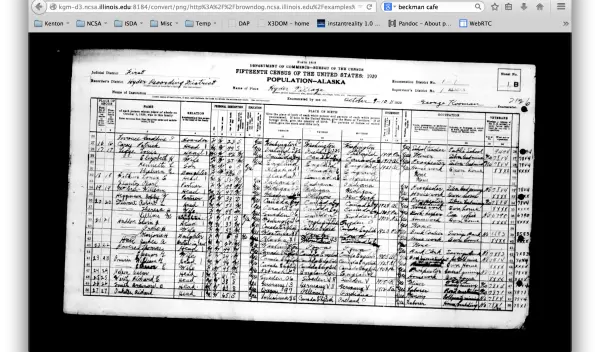

In a demonstration at the Brown Dog Early User Workshop in July 2014, McHenry showed off the tool's ability to turn obscure file formats into ones that are more easily viewable.

The second tool, the Data Tilling Service (DTS), lets individuals search collections of data, possibly using an existing file as an example to discover similar files in the collection. Once the machine and browser settings are configured, a search field will be appended to the browser, and the user can drop example files into it. Doing so triggers the DTS to search the contents of all the files on a given site for those similar to the one the user provided.

For example, while browsing an online image collection, a user could drop an image of three people into the search field, and the DTS would return images in the collection that also contain three people. If the DTS encounters a file format it is unable to parse, it will use the Data Access Proxy to make the file accessible.
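A search-by-example of this kind can be sketched as a nearest-neighbor lookup over features extracted from each file. The sketch below is an illustration under stated assumptions, not the DTS itself: the index, the file names, and the two-number feature vectors (people count, outdoor flag) are invented, and Euclidean distance is simply one possible similarity measure.

```python
import math

# Hypothetical index: features assumed to have been extracted from each
# image ahead of time, as (number_of_people, is_outdoor).
INDEX = {
    "family.jpg": (3, 1),
    "portrait.jpg": (1, 0),
    "team.jpg": (3, 0),
    "landscape.jpg": (0, 1),
}


def search_by_example(query_features, index, top_k=2):
    """Return the top_k file names whose features are closest to the
    query features, nearest first (Euclidean distance)."""
    ranked = sorted(index, key=lambda name: math.dist(query_features, index[name]))
    return ranked[:top_k]


# The user drops in an outdoor photo of three people.
print(search_by_example((3, 1), INDEX))
```

In practice the hard part is the feature extraction itself (for instance, counting people in an image), which this sketch takes as given.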

The Data Tilling Service will also perform general indexing of the data and extract and append metadata to files to give users a sense of the type of data they are encountering.
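As a rough illustration of what basic metadata extraction might look like, the sketch below collects a few generic properties of a file using only the Python standard library. The function name `extract_metadata` and the choice of fields are assumptions made for this example; the article does not describe which metadata the DTS actually extracts.

```python
import hashlib
import mimetypes
import os
import tempfile


def extract_metadata(path):
    """Collect simple, format-independent metadata for one file."""
    with open(path, "rb") as handle:
        digest = hashlib.sha256(handle.read()).hexdigest()
    mime_type, _encoding = mimetypes.guess_type(path)  # guessed from the file name
    return {
        "name": os.path.basename(path),
        "size_bytes": os.path.getsize(path),
        "mime_type": mime_type or "unknown",
        "sha256": digest,  # content fingerprint, useful for spotting duplicates
    }


# Demonstrate on a small temporary file.
with tempfile.TemporaryDirectory() as tmp:
    sample = os.path.join(tmp, "notes.txt")
    with open(sample, "wb") as handle:
        handle.write(b"hello")
    print(extract_metadata(sample))
```

Content-aware metadata (who appears in a photo, what a spreadsheet column measures) would need format-specific extractors layered on top of generic fields like these.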

McHenry likens these two services to the Domain Name System (DNS), which makes the Internet humanly navigable by translating domain names, like CNN.com, into the numerical IP addresses needed to locate computer devices and services and the information they provide.

"The two services we're developing are like a DNS for data, translating inaccessible uncurated data into information," he said. According to IDC, a research firm, up to 90 percent of big data is "dark," meaning the contents of such files cannot be easily accessed.

Rather than starting from scratch and constructing a single all-encompassing piece of software, the NCSA team is building on previous software development work. The project aims to bring together every available source of automated help already in existence. By patching together such components, they plan to make Brown Dog the "super mutt" of software.

This effort is in line with the Data Infrastructure Building Blocks (DIBBS) program at NSF, which supports the development of McHenry's software. DIBBS aims to improve data science by supporting the development of the tools, technologies and community knowledge required to rapidly advance the field.

"Brown Dog today is developing a 'time machine' set of cyberinfrastructure tools, software and services that respond to the long-standing aspiration of many scientific, research and educational communities to effectively access, share and apply digital data and information originating in diverse sources and legacy environments in order to advance contemporary science, research and education," said Robert Chadduck, the program director at NSF who oversees the award.

Projects supported by DIBBS involve collaborations between computer scientists and researchers in other fields. The initial collaborators for the Brown Dog software were researchers in geoscience, biology, engineering and social science.

Brown Dog co-principal investigator Praveen Kumar, a professor in the Department of Civil and Environmental Engineering at the University of Illinois at Urbana-Champaign and the director of the NSF-supported Critical Zone Observatory for Intensively Managed Landscapes, is developing ways to exploit Brown Dog for the analysis of LIDAR data. He hopes to characterize landscape features for the study of human impact in the critical zone.

"These technologies will enable rapid investigation of large high-resolution datasets in conjunction with other data such as photographs and ground measurements for modeling and cross comparison across study sites," Kumar said.

McHenry is also a team member on a new DIBBS project that applies some of the insights from his work to data-driven discovery in materials science.

Brown Dog isn't only useful for searching the Deep Web, either. McHenry says the Brown Dog software suite could one day be used to help individuals manage their ever-growing collections of photos, videos and unstructured/uncurated data on the Web.

"Being at the University of Illinois and NCSA, many of us strive to create something that will live on to have the broad impact that the NCSA Mosaic Web browser did," McHenry said, referring to the first widely used graphical Web browser, which was developed at NCSA. "It is our hope that Brown Dog will serve as the beginnings of yet another such indispensable component for the Internet of tomorrow."