Highly dexterous robot hand can operate in the dark — just like humans

Think about what your hands do when you're home at night pressing buttons on your TV remote, or at a restaurant handling all kinds of cutlery and glassware. These skills rely on touch, because your eyes are on the TV program or the menu. Our hands and fingers are incredibly skilled mechanisms, and highly sensitive to boot.

Robotics researchers have long been trying to create "true" dexterity in robot hands, but the goal has been frustratingly elusive. Robot grippers and suction cups can pick and place items, but more dexterous tasks such as assembly, insertion, reorientation and packaging have remained in the realm of human manipulation. However, spurred by advances in both sensing technology and machine-learning techniques to process the sensed data, the field of robotic manipulation is changing very rapidly.

Now U.S. National Science Foundation-supported researchers at Columbia Engineering have demonstrated a highly dexterous robot hand that combines an advanced sense of touch with motor learning algorithms, which allow a robot to learn new physical tasks via practice.

As a demonstration of skill, the team chose a difficult manipulation task: performing a large rotation of an unevenly shaped object grasped in the hand, while maintaining the object in a stable, secure hold. The task requires constant repositioning of some fingers while other fingers keep the object stable. The hand not only performed the task, but did so without visual feedback, based solely on touch sensing.

The fact that the hand does not rely on vision to manipulate objects means that it can do so in very difficult lighting conditions that would confuse vision-based algorithms — it can even operate in the dark.

"While our demonstration was on a proof-of-concept task, meant to illustrate the capabilities of the hand, we believe that this level of dexterity will open up entirely new applications for robotic manipulation in the real world," said mechanical engineer Matei Ciocarlie. "Some of the more immediate uses might be in logistics and material handling, helping ease up supply chain problems like the ones that have plagued our economy in recent years, and in advanced manufacturing and assembly in factories."

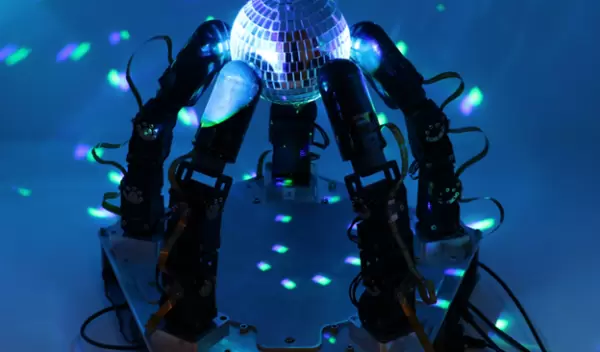

For this new work, led by Gagan Khandate, the researchers designed and built a robot hand with five fingers and 15 independently actuated joints, and equipped each finger with the team's touch-sensing technology. The next step was to test whether the tactile hand could perform complex manipulation tasks. To do this, they used deep reinforcement learning, a machine-learning approach to motor learning, augmented with new algorithms they developed to explore possible motor strategies more effectively.

The paper has been accepted for presentation at the upcoming Robotics: Science and Systems (RSS) conference and is currently available as a preprint.

"This project, funded under NSF's Future Manufacturing Program, explores new possibilities in applying machine intelligence to allowing robots to execute manufacturing tasks independently, while adapting to changing conditions," said Bruce Kramer, a program director in NSF's Directorate for Engineering. "Autonomous robots learn from prior experience and call home for human assistance when their confidence level is low."