New algorithms improve prosthetics for upper limb amputees

Reaching for something on the top shelf in the grocery store or brushing one's teeth before bed are tasks many people can do without thinking. But doing these same tasks can require more mental effort for upper limb amputees using a prosthetic device.

NSF-funded researchers at Texas A&M University, led by Maryam Zahabi, are studying machine learning algorithms and computational models to provide insight into the mental demand placed on individuals using prosthetics. These models will help improve the interfaces currently used in such devices.

The researchers are studying prosthetics that use an electromyography-based human-machine interface. Electromyography (EMG) is a technique that records the electrical activity in muscles. That activity produces signals that trigger the interface, which translates them into a unique pattern of commands.

These commands allow the user to move the prosthetic device. But using such prosthetics can be mentally draining for upper limb amputees, even during simple daily tasks.
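The signal-to-command translation described above can be illustrated with a minimal sketch. It is not the researchers' method: the two-channel layout, the 0.5 threshold, the command names and the `decode` function are all assumptions made for illustration, and real interfaces typically rely on trained pattern-recognition models rather than a fixed lookup table.

```python
import math

# Hypothetical mapping from the pattern of active muscles to a command.
# Assumed channel order: (flexor, extensor). Command names are invented.
COMMANDS = {
    (False, False): "rest",
    (True, False): "close_hand",
    (False, True): "open_hand",
    (True, True): "rotate_wrist",
}


def rms(window):
    """Root-mean-square amplitude of one window of EMG samples."""
    if not window:
        raise ValueError("window must contain at least one sample")
    return math.sqrt(sum(x * x for x in window) / len(window))


def decode(windows, threshold=0.5):
    """Translate one sample window per channel into a command string.

    A channel counts as active when its RMS amplitude exceeds the
    (assumed) threshold; the tuple of active flags selects the command.
    """
    pattern = tuple(rms(w) > threshold for w in windows)
    return COMMANDS[pattern]


# Example: strong flexor activity, quiet extensor.
flexor = [0.9, -1.1, 1.0, -0.8]
extensor = [0.05, -0.02, 0.03, -0.04]
command = decode([flexor, extensor])
```

Even in this toy version, the user has to produce and hold a distinct muscle pattern for every action, which hints at why operating such an interface can demand sustained attention.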

"There are over 100,000 people with upper limb amputations in the United States," Zahabi said. "Currently there is very little guidance on which features in EMG-based human-machine interfaces are helpful in reducing the cognitive load of patients while performing different tasks."

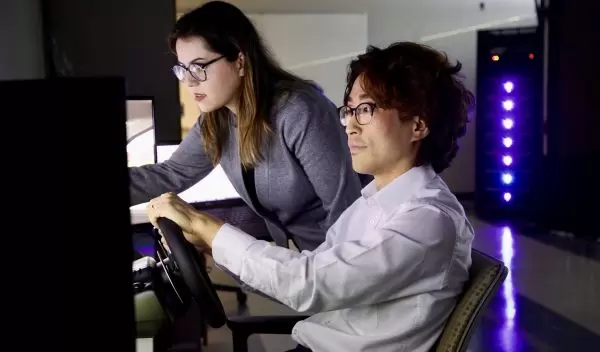

Testing different interface prototypes in virtual reality and driving simulations will allow the researchers to provide guidance to the engineers who create these interfaces. That guidance will lead to better prosthetics for amputees and to advances in other technologies that use EMG-based assistive human-machine interfaces.

North Carolina State University and the University of Florida are collaborating with Texas A&M on the research.