Researchers use machine learning to find out which wildfires will burn out of control

Scientists at the University of California, Irvine have developed a new technique for predicting the final size of a wildfire from the moment of ignition.

Built around a machine learning algorithm, the model can help forecast whether a blaze will be small, medium or large by the time it has run its course, knowledge that is useful to those in charge of allocating scarce firefighting resources. The researchers' work is described in a study published in the International Journal of Wildland Fire.

"An analogy is what makes something go viral in social media," said lead author Shane Coffield. "We can think about what properties of a specific tweet or post might make it blow up and become really popular, and how you might predict that at the moment it's posted or right before it's posted."

Coffield and his colleagues applied that idea to a hypothetical situation in which dozens of fires break out simultaneously. It sounds extreme, but this scenario has become all too common in recent years in parts of the western United States, as climate change has produced hot, dry conditions that can put a region at high risk of ignition.

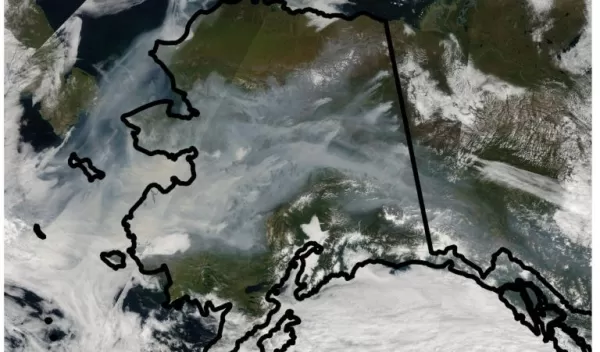

The team used Alaska as a study area for the project because the state has been plagued over the past decade by a rash of concurrent fires in its boreal forests, threatening human health and vulnerable ecosystems.

At the core of the scientists' model is a "decision tree" algorithm. By feeding the algorithm climate data and crucial details about atmospheric conditions and types of vegetation around the starting point of a fire, the researchers could correctly predict whether a blaze would end up small, medium or large 50 percent of the time.
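To illustrate what a decision tree does with this kind of input, here is a minimal sketch of a classifier that maps conditions at an ignition point to a small, medium or large label. It is not the UC Irvine team's model: the three features (air temperature, relative humidity, conifer fraction), the toy training rows and the depth limit are all hypothetical, and the split rule is a standard CART-style Gini impurity criterion written from scratch so that it needs only the Python standard library.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical features for one ignition point (not the study's inputs):
# (air_temperature_c, relative_humidity_pct, conifer_fraction)
Features = Tuple[float, float, float]


def gini(labels: List[str]) -> float:
    """Gini impurity: 0.0 when every label in the group is the same."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


@dataclass
class Node:
    label: str                         # majority size class at this node
    feature: Optional[int] = None      # index of the feature tested here
    threshold: Optional[float] = None  # go left if x[feature] <= threshold
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def best_split(X: List[Features], y: List[str]) -> Optional[Tuple[int, float]]:
    """Try every feature/threshold pair; keep the one with lowest weighted Gini."""
    best, best_score = None, gini(y)
    for f in range(len(X[0])):
        for t in sorted({x[f] for x in X}):
            left = [label for x, label in zip(X, y) if x[f] <= t]
            right = [label for x, label in zip(X, y) if x[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best_score:
                best, best_score = (f, t), score
    return best


def build(X: List[Features], y: List[str], depth: int = 0, max_depth: int = 3) -> Node:
    """Grow the tree recursively until nodes are pure or max_depth is reached."""
    node = Node(label=Counter(y).most_common(1)[0][0])
    if depth >= max_depth or len(set(y)) == 1:
        return node
    split = best_split(X, y)
    if split is None:  # no split reduces impurity, so this stays a leaf
        return node
    f, t = split
    node.feature, node.threshold = f, t
    left_idx = [i for i, x in enumerate(X) if x[f] <= t]
    right_idx = [i for i, x in enumerate(X) if x[f] > t]
    node.left = build([X[i] for i in left_idx], [y[i] for i in left_idx], depth + 1, max_depth)
    node.right = build([X[i] for i in right_idx], [y[i] for i in right_idx], depth + 1, max_depth)
    return node


def predict(node: Node, x: Features) -> str:
    """Follow the threshold tests from the root down to a leaf."""
    while node.feature is not None:
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node.label


# Invented toy training rows, for illustration only.
X_TRAIN: List[Features] = [
    (12.0, 70.0, 0.20), (14.0, 65.0, 0.30), (16.0, 60.0, 0.40),
    (20.0, 45.0, 0.50), (22.0, 40.0, 0.60), (24.0, 35.0, 0.50),
    (27.0, 25.0, 0.80), (29.0, 20.0, 0.90), (30.0, 18.0, 0.85),
]
Y_TRAIN = ["small"] * 3 + ["medium"] * 3 + ["large"] * 3

tree = build(X_TRAIN, Y_TRAIN)
print(predict(tree, (25.0, 30.0, 0.70)))  # → large
```

Because these made-up rows separate cleanly, the tree fits them perfectly; that says nothing about real-world skill, which the article reports as 50 percent. A realistic evaluation would score the tree on fires held out from training.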

The research is funded by NSF's Directorate for Computer and Information Science and Engineering.