Advances in Computational Research Transform Scientific Process and Discovery

Not every scientific discovery originates in the lab or in the field.

Scientists are increasingly turning to powerful new computers to perform, at breathtaking speed, calculations that earlier-generation machines could not handle, yielding groundbreaking computational insights across a range of research fields.

"Science is conducted in a number of ways, including traditional pen and paper, deep thinking, theoretical studies and observational work--and then there is the computational side of things," says Michael Wiltberger, who studies space weather at the high altitude observatory of the National Science Foundation- (NSF) supported National Center for Atmospheric Research (NCAR). "Scientists use these computers to look at a set of equations they can't solve with a piece of paper, or a laptop.

"Science is now investing in these super computers, and they are where many new scientific discoveries are being made," he adds. "These computers are moving science out of the ivory tower and out into the real world, where it can have a direct impact on peoples' lives."

Last October, NSF inaugurated Yellowstone, one of the world's most powerful computers, based at NCAR in Cheyenne, Wyo. Later this month it will dedicate two additional supercomputers: Blue Waters, located at the National Center for Supercomputing Applications at the University of Illinois at Urbana-Champaign, and Stampede, headquartered at the Texas Advanced Computing Center (TACC) at The University of Texas at Austin.

NSF has provided more than $200 million in acquisition and deployment funding for the three systems (with the largest amount associated with Blue Waters). It is expected that over the life of the systems, an additional $200 million in operational costs will be funded by NSF.

The three systems will provide the nation's research community with unprecedented computational capabilities, offering the opportunity to better test and advance great scientific ideas. They further enhance the already potent union between technology and the human mind, in which each strengthens the other.

"The computer is excellent in permitting us to test a hypothesis," says Klaus Schulten, a professor of physics at the University of Illinois at Urbana-Champaign, who uses large-scale computing to study the molecular assembly of biological cells, most recently HIV, the virus that causes AIDS. "But if you want to test a hypothesis, you need to have a hypothesis."

By all measures, these computers, with their high speed and storage capacity, and the ability to produce high-resolution simulations, will have a significant impact on the pace of scientific progress.

They allow today's scientists to better understand the workings of the Earth and beyond, for example, by helping to trace the evolution of distant galaxies, by providing data that contribute to the design of new materials, by unraveling the complicated implications of climate change, and by supporting researchers trying to forecast tornadoes, hurricanes and other severe storms, and even space weather, such as solar eruptions.

They also improve scientists' ability to predict earthquakes, to model the flow of ice sheets in Antarctica in order to gauge future sea level rise, and to decipher the behavior of viruses and other microscopic life-forms, among other things. "The microscopes of today are not only made of glass and metal, but of software," Schulten says.

Alexie Kolpak, an assistant professor in the Department of Mechanical Engineering at the Massachusetts Institute of Technology, says that she almost certainly would not have begun her current work if she didn't have access to these computers. "I couldn't do what I do without them," she says, adding: "Well, I could--but it would take forever."

To be sure, most scientists don't have forever when it comes to solving many of the pressing scientific questions that currently confront society.

Kolpak is using quantum-mechanical calculations to design materials from the atomic scale up, with the aim of helping to solve energy problems, specifically by capturing carbon and converting it into useful industrial products. She is focusing on materials with ferroelectric properties, in combination with other substances. Ferroelectric materials have a spontaneous electric polarization that reverses when an external voltage is applied. They can absorb carbon, which can then be turned into other compounds, such as cyclic carbonates--a class of chemicals used in plastics and as solvents--when the outside voltage is applied.

However, the problem with ferroelectric materials is that they don't work very well by themselves. This is why Kolpak is "testing" ferroelectric materials with other substances computationally to see how carbon reacts with the different combinations. Stampede allows her to do this.

"We start with an atomic structure and figure out where the atoms will go, and what structure they will form," she explains. "We can compute how strongly the material will bind CO2, and how quickly the CO2 will react. With these computer simulations, we can look at novel interesting combinations of materials as new solutions to the problem."

Wiltberger, at NCAR, is using Yellowstone to develop numerical space weather models to predict whether and how the magnetic field from a coronal mass ejection from the sun will affect the Earth. Such a magnetic field has the potential to seriously disrupt communications and navigation systems, as well as the power grid.

"We don't have a heck of a lot of lead time after we see that big ball of plasma coming from the sun," he says. "It takes about three days to reach the Earth, but currently we don't get data about the direction of the magnetic field until about 45 minutes before it hits Earth. We are trying to develop computer models that will spit out an answer faster than 45 minutes, so that we can provide a warning in a faster time frame."

In biology, the Blue Waters computer allowed Schulten to describe in minute detail the capsid, the protein shell that encloses the HIV genome, along with its behavior, a computation that required the simulation of more than 60 million atoms. "It's a living, moving entity and looks very beautiful," he says. "The computer will tell us the physical properties and biological function."

The value of these new machines is their ability to produce high-resolution simulations with very fast calculations that take established data into account, along with the uncertainties, giving scientists a way to better span the range of possible scenarios.

"The machines are faster and we have better algorithms," says Thomas Jordan, director of the NSF-funded Southern California Earthquake Center, a community of more than 600 scientists, students and others at more than 60 institutions worldwide, headquartered at the University of Southern California. "We can be hit by many different types of earthquakes, and we have to be able to represent many possibilities. These computers allow us to do that for the first time."

Earthquake center researchers, for example, are using Stampede to develop an updated framework for comparing earthquake likelihoods throughout California, including the degree of ground shaking at specific locations such as downtown Los Angeles. The goal is to improve seismic safety engineering and building codes, inform insurance rates and help communities prepare for inevitable future temblors. Ultimately, the U.S. Geological Survey will use these data to develop its seismic hazard maps.

"There are a lot of different types of information about earthquakes and where they might occur in future," Jordan says. "We use a complex set of information to develop models, including where the faults are and where earthquakes have occurred in past, how big they are and so forth. Our instrumental record only goes back less than 100 years, so we have to put together other types of information, including paleoseismic information, that is, looking for evidence of prehistoric earthquakes."

Eventually the researchers will develop an earthquake "rupture" forecast, "which tells you, for example, the probability of having a large earthquake on the San Andreas fault in the next 50 years," Jordan explains. "Once you have this earthquake rupture forecast, you want to know how strong the shaking will be at certain sites, which requires additional calculations. You have to figure out how that earthquake will generate waves and propagate from the fault and cause shaking in cities around the fault. We call this a ground motion prediction model."

In order to do this, "we have to simulate many earthquakes, and this is where we really use a lot of computer time," he adds. "This is where Stampede and Blue Waters are important machines. We have to do hundreds of thousands of simulations of earthquakes."
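As a rough illustration of what a rupture forecast expresses, the sketch below computes the chance of at least one large earthquake in a 50-year window under a simple Poisson (memoryless) model. This is not the earthquake center's method; the 150-year mean recurrence interval and the function name are hypothetical, chosen only to make the arithmetic concrete.

```python
import math


def prob_at_least_one_event(window_years, mean_recurrence_years):
    """Poisson model: P(at least one event) = 1 - exp(-rate * window)."""
    rate_per_year = 1.0 / mean_recurrence_years
    return 1.0 - math.exp(-rate_per_year * window_years)


# Hypothetical input: a fault that ruptures on average once every 150 years.
p_50yr = prob_at_least_one_event(window_years=50, mean_recurrence_years=150)
print(f"Chance of at least one large event in 50 years: {p_50yr:.1%}")
```

A real forecast has to combine many faults, magnitudes and kinds of evidence, and then simulate the resulting ground motion, which is where the hundreds of thousands of simulations Jordan mentions come in.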

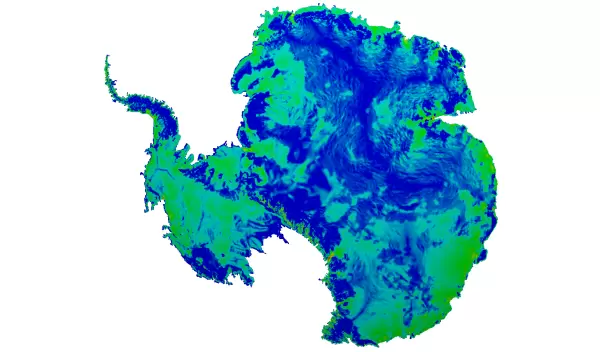

Similarly, Omar Ghattas, a professor of geological sciences and mechanical engineering at The University of Texas at Austin and director of the Center for Computational Geosciences at the Institute for Computational Engineering and Sciences, is leading a team that is trying to figure out the dynamics of ice sheets in Antarctica, and how they affect sea level rise. "As the climate warms, glaciologists have observed that the flow of ice into the ocean has been speeding up in West Antarctica. Why?" he says.

Ghattas tells Stampede what he knows--the topography of the land, for example, and the temperature at the top surface of the ice--and what he doesn't know--what the condition is under the ice at the base, known as "friction," which can impede ice flow.

"We ask the computer what the friction would have to be in order for the model to predict the flow velocity consistent with what we observe," he says. "We have to come up with very sophisticated mathematical algorithms to solve this so-called inverse problem. They find the appropriate values of the friction of the base along with the associated uncertainty.

"We need to solve the model many times, because the friction parameters are so uncertain," he adds. "We come up with possible scenarios, but to decide which is feasible, we compare them with past data and assign probabilities. Then we resubmit it to the computer to come up with a more likely scenario; in effect, we come up with likely scenarios of the past, then turn them around to make the model predictive of the future."

At Michigan State University, Brian O'Shea, an assistant professor of physics and astronomy, is simulating the formation and evolution of the Milky Way's most distant ancestors, small galaxies formed soon after the big bang.

With Blue Waters, he can achieve more than 1,000 times the mass resolution and 32 times the spatial resolution of simulations from the late 1990s, giving him the ability to probe the universe at much smaller physical scales than previously possible.

"We are simulating how a bunch of galaxies form and evolve over the age of the universe," O'Shea says. "It's a kind of statistics game. We have observations of millions and millions of galaxies, and, in order to compare them, we need to simulate lots of galaxies at the same time, and many different sizes at once. What we want is a picture of how all the galaxies in the universe have evolved over time. We want to understand where the galaxies came from and how they form. We want to understand where we came from."

Brian Jewett, an atmospheric research scientist at the University of Illinois at Urbana-Champaign, is studying tornadoes as part of the team led by principal investigator and professor emeritus Bob Wilhelmson, with the goal of better understanding the factors that can lead to dangerous twisters.

Jewett says Blue Waters offers a way to change the physical processes of tornadoes, and produce simulations that show how tornadoes behave under a range of different weather conditions. "There are things you can do with computers that you can't easily do with other means of research," he says. "You can change how the atmosphere works in a computer through simulations, but you can't go outside and do it."

For example, when a storm cell merges with a "parent" storm, many different things can happen. Among them, it can destroy a storm or intensify it, possibly producing a potent tornado like the catastrophic twister that decimated Joplin, Mo., in 2011. In the computer, "we are making that merger not happen," Jewett says. "We will change the approaching storms so the merger doesn't occur. We want to see whether it will still be as destructive as it was, or whether it would've been weaker.

"We already know from storm chaser reports over decades that mergers can either kill off a storm, or result in one that is much stronger," he adds. "Understanding this and other factors that influence the merger could help in future prediction and warnings."

Cristiana Stan, an assistant professor in the Department of Atmospheric, Oceanic and Earth Sciences at George Mason University, is simulating cloud processes in detail to better understand their role in climate change.

"Clouds play an important role in the formation of a variety of atmospheric phenomena, from simple rain showers to large events such as hurricanes," she says. "They have an impact on the amount of solar radiation that reaches the surface of the Earth, and the longwave radiation emitted by the Earth's surface, on the large-scale circulation of the atmosphere, and on the ocean-atmosphere interactions on a wide range of time scales."

Stan's challenge was to find a way to simulate clouds, which are small, in very high resolution, down to 1 kilometer. With the help of Blue Waters, she produced such a model.

"The way a cloud forms and disappears all depends on processes quite small in scale compared to the planet as a whole," says Jim Kinter director of George Mason's Center for Ocean-Land-Atmosphere Studies. Using these powerful computers, Stan "can now represent the way clouds truly work in the global climate. It takes the world's biggest computer to solve the world's climate system with clouds the way they are supposed to be."

Reflecting upon the rapid evolution of these new and powerful machines, computational scientists who use them believe the era of great science through supercomputing is just beginning.

"When I joined Carnegie Mellon in 1989, we had a supercomputer that was among the most powerful in the land," Ghattas says. "Today, the smartphone I hold in the palm of my hand is faster than that machine, which is remarkable. And Stampede is 20 times faster than the system it replaces, or 4 million times faster than that 1989 supercomputer. That's tremendous. It's hard to think of any field where the growth in the performance of the scientific instruments has been as great as in computational science. We keep pushing the boundaries of supercomputer performance because we need all that power, and more."