Video Game Technology and Science?

Researchers who design and simulate molecules are facing a technological traffic jam.

The bottleneck is caused by the amount of time--ranging from days to years--a computer needs to run complex mathematical equations, or algorithms, used by scientists and engineers to develop more effective drugs, catalysts for fuel cells and other molecular-based materials and applications.

Todd Martínez, a professor of chemistry at Stanford University, may just have the answer for breaking up this computational logjam.

In the March 2009 online issue of the Journal of Chemical Theory and Computation, Martínez and graduate student Ivan S. Ufimtsev announced they had rewritten algorithms to run on a computer's graphics processing unit (GPU) rather than the central processing unit (CPU) of a traditional desktop computer.

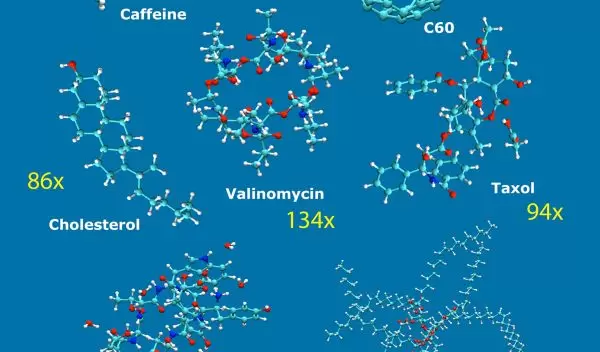

The revamped algorithms calculated the structures of test molecules up to 650 times faster than the molecular design program called GAMESS running on a computer's CPU (see top image).

A GPU is the core of a computer's video card and the technological driving force behind the ultra-fast graphics and realism of today's video games.

The researchers ran their algorithms on three different GPU systems, each with as many as 240 parallel processors and a novel "stream processing" architecture that allows the GPUs to perform as general-purpose processing units rather than being hardwired just to process graphics.

A GPU can process nearly a trillion operations per second and may be purchased for as little as $260. A CPU with four parallel processors and a slower processing speed of 50 billion operations per second costs around $1,000.
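The arithmetic behind that comparison can be sketched in a few lines of Python. This is only an illustration built on the figures quoted above: "nearly a trillion" is rounded up to exactly 10^12 as an assumption, the variable and function names are made up for this example, and quoted peak rates say nothing about what a real program sustains.

```python
# Figures quoted in the article. "Nearly a trillion" is rounded up to 1e12,
# which is an assumption made only for this illustration.
GPU_OPS_PER_SEC = 1e12
GPU_PRICE_USD = 260
CPU_OPS_PER_SEC = 50e9
CPU_PRICE_USD = 1000


def ops_per_dollar(ops_per_sec, price_usd):
    """Peak operations per second bought by each dollar of hardware."""
    return ops_per_sec / price_usd


# How many times more raw operations per second the GPU offers.
throughput_ratio = GPU_OPS_PER_SEC / CPU_OPS_PER_SEC

# How many times more operations per second each dollar buys on the GPU.
value_ratio = (
    ops_per_dollar(GPU_OPS_PER_SEC, GPU_PRICE_USD)
    / ops_per_dollar(CPU_OPS_PER_SEC, CPU_PRICE_USD)
)

print(f"Raw throughput advantage: about {throughput_ratio:.0f}x")
print(f"Throughput per dollar advantage: about {value_ratio:.0f}x")
```

Under these rounded figures the GPU offers roughly 20 times the peak throughput and roughly 77 times the peak throughput per dollar. The 650-fold speedup reported for the rewritten algorithms is a separate, measured comparison against GAMESS and does not follow from these peak numbers alone.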

"Provided that compatible algorithms like the ones being developed by Martínez are available, and given the high performance and low price of these units, GPUs offer an alternative to using CPUs or competing for processor hours on supercomputers operated by national facilities," said Robert Kuczkowski, the National Science Foundation (NSF) program director who oversees this research.

Using their rewritten "Direct Self-Consistent-Field" algorithm running on one GPU, Martínez and Ufimtsev calculated the structures of seven benchmark, or test, molecules ranging in size from the petite 24-atom caffeine molecule to the burlier 453-atom olestra molecule (see top image). Olestra is an indigestible fat substitute used in manufactured goods.

The algorithm's remarkable speedup stems from its ability to very quickly process the first, and one of the most time-consuming, steps in a "quantum" chemistry algorithm: predicting the 3D distribution of electrons, or pairs of electrons, in the different energy levels, or orbitals, surrounding the nuclei of the atoms making up the molecule (see three top right images).

The exact locations of electrons cannot be pinned down due to their dual wave-like and particle properties.

The highly successful results obtained by running the redesigned quantum chemistry algorithm on one GPU "puts molecular design in reach as well as simulations of biologically and pharmaceutically important systems such as small proteins with unprecedented accuracy and speed," said Martínez.

Proteins are the cell's workhorses and participate in virtually every cellular process, ranging from metabolism to immune system responses.

Enabling new molecules to be theoretically designed and tested before their actual synthesis in the laboratory is another perk of the researchers' findings. "This new toolbox will speed up the exploration and prediction of new molecules with advanced properties and will reduce wasted effort spent on unproductive leads," notes Martínez.

Thom H. Dunning, Jr., lead chemist of the research project and director of NSF's National Center for Supercomputing Applications, and Martínez are keenly aware that the computers of tomorrow will not be built using the technologies of today. The chemistry community therefore needs to develop chemical computations that can run on future computers with astonishing processing speeds of one quadrillion, or 10^15, operations per second, a rate called a "petaflop."

"Martínez's research results fit exactly with our grant's goal of assessing the performance and limitations of computational applications, such as quantum chemistry algorithms, on the technologies expected to be the basis for the petascale and 'exascale' computers of the future," said Dunning. A futuristic exascale computer will process operations at the mind-boggling speed of one quintillion, or 10^18, operations per second.
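To give a feel for these scales, the short Python sketch below estimates how long one fixed job would take at each of the processing rates mentioned in this article. The workload size of one quintillion operations is purely hypothetical, chosen so that an exascale machine would finish in one second, and the calculation assumes each machine sustains its quoted peak rate, which real programs rarely do.

```python
# Processing rates in operations per second. The CPU and GPU values are the
# figures quoted in the article (the GPU's "nearly a trillion" is rounded up);
# the petascale and exascale values follow from the definitions given.
RATES_OPS_PER_SEC = {
    "quad-core CPU": 50e9,
    "single GPU": 1e12,
    "petascale computer": 1e15,
    "exascale computer": 1e18,
}

# Hypothetical job size, chosen only for illustration.
HYPOTHETICAL_WORKLOAD_OPS = 1e18

SECONDS_PER_DAY = 86_400


def runtime_seconds(total_ops, ops_per_sec):
    """Idealized run time, assuming the peak rate is sustained throughout."""
    return total_ops / ops_per_sec


for machine, rate in RATES_OPS_PER_SEC.items():
    seconds = runtime_seconds(HYPOTHETICAL_WORKLOAD_OPS, rate)
    days = seconds / SECONDS_PER_DAY
    print(f"{machine}: {seconds:,.0f} seconds (about {days:,.2f} days)")
```

Under these idealized assumptions, the same job that would occupy the quad-core CPU for about 231 days would take about 12 days on a single GPU, roughly 17 minutes on a petascale computer and one second on an exascale computer.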

Funding for this research is provided by the NSF Divisions of Chemistry and Materials Research.