NSF AI Institutes continue creating groundswell of innovation

With launch of third cohort, two established AI Institutes reflect on early results

Artificial intelligence models, such as OpenAI's ChatGPT, have dominated the news cycle and captured the public's imagination. With Microsoft's Bing, Google's Bard, Spotify's DJ, and GitHub's Copilot, AI applications are being quickly adopted and are advancing across industries and interfaces. But AI offers more than just better internet search results or personalized and curated online experiences.

AI models are profoundly accelerating scientific progress. Consider sharper images of black hole horizons, breakthroughs in hydrogen fusion, and safer self-driving vehicles. This acceleration is possible, in part, because of the U.S. National Science Foundation. In fact, the U.S. is leading the world in AI advancements because of investments made and sustained by NSF, the nation's primary non-defense federal funder of fundamental AI research.

The NSF-led National AI Research Institutes – or AI Institutes – program is the foundation's flagship program for use-inspired AI research, and it is the nation's largest AI research ecosystem, funded through partnerships with other federal agencies and industry leaders.

These Institutes focus on Administration and NSF priorities on AI and are paving the path for U.S. AI innovation and workforce development for decades to come. The program, a defining part of NSF Director Sethuraman Panchanathan’s tenure, launched with a cohort of seven Institutes in 2020, and 11 more were announced in 2021. In 2023, NSF announced seven new Institutes, bringing the total investment in these AI Institutes to $500 million and a network of over 500 funded and collaborative institutions across the U.S. and around the world.

The topical themes, or tracks, covered by the AI Institutes span critical pillars of our societal and economic development, such as agriculture and food security, health care, weather forecasting, education, trustworthy AI, supply chain management, and cybersecurity, among other fields. Two AI Institutes from the 2020 cohort, the AI Institute for Foundations of Machine Learning and the AI Institute for Research on Trustworthy AI in Weather, Climate and Coastal Oceanography, are examples of how NSF investments are transforming AI for the benefit of our nation.

Fundamentals of AI

The AI Institute for Foundations of Machine Learning, or IFML, focuses on core foundational challenges of machine learning, integrating mathematical tools with real-world objectives to advance state-of-the-art technologies and applications that are fair, ethical, precise and efficient. Led by The University of Texas at Austin, the AI Institute’s cross-disciplinary team develops new tools that advance precision medicine, enable more potent and stable vaccines, improve self-driving cars, and ensure equity and fairness in applications that rely on digital imaging.

A key challenge, for example, is to build new, efficient deep learning algorithms that account for constantly evolving data and incorporate changing contexts, in much the same way the human brain reacts and adjusts to new information in real time.

"Everyone who works in deep learning tries to understand how much data and computation is required for a model to generalize," said Adam Klivans, a computer science professor and director of IFML. "Finding algorithms to optimize these resources has never been a more important goal. Our research makes training and inference faster and more robust across broad classes of deep learning architectures, so that they can be used in a variety of applications."

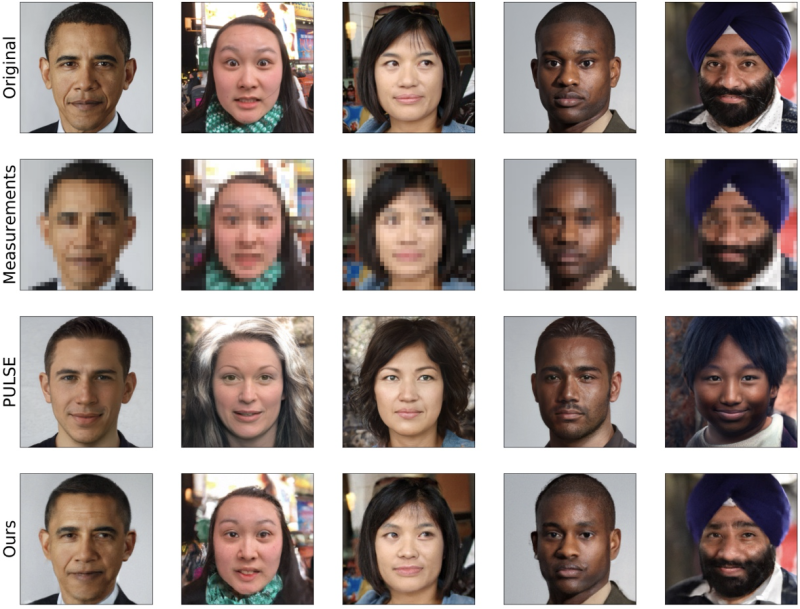

What results from IFML can we see in the real world? One example is denoising – taking blurry images and making them sharper. A major application of this technology is in healthcare. In particular, IFML works with clinicians to improve magnetic resonance imaging using deep generative models.

One known issue in denoising images of faces was that past systems performed poorly on images of Black and brown people. Both biases in the data set and flaws in the reconstruction algorithm contributed to this problem, according to Klivans. "We have shown that using a smarter algorithm for denoising images mitigates bias in the resulting reconstructions and applying heuristics inspired by the underlying mathematics of diffusion results in improved sampling methods for generative models."

IFML advocates for more openness regarding the deployment of large foundation models such as GPT-4 and CLIP, which is trained on an image and text dataset. IFML is collaborating with LAION, a non-profit working to make large-scale machine learning models, datasets and related code available to the general public, to create an open-source version of CLIP. “This gives academic researchers, and the public in general, the ability to better understand why extremely large models work so well,” Klivans said.

“It’s exciting to see machine learning tools being applied so broadly across the sciences,” Klivans said. “IFML continues to focus on algorithms, and we have several projects showcasing how impactful this research can be. As machine learning tools rely on even more sophisticated mathematical models, the connection between theoretical research and what we can demonstrate in practice has never been stronger.”

Improving trustworthy AI by developing AI for weather

Developing AI-powered innovations that people can trust and, therefore, use is also a key component of the work of the AI Institutes. The NSF AI Institute for Research on Trustworthy AI in Weather, Climate and Coastal Oceanography, or AI2ES, based at the University of Oklahoma, is developing models that improve the accuracy, reliability and clarity of AI techniques that underpin crucial weather models and predictions. AI2ES works directly with end-users to transform our understanding of trust in AI.

AI2ES brought together interdisciplinary researchers to develop AI-powered innovations for the weather and climate forecasting sector, according to Amy McGovern, a computer science and meteorology professor and the director of AI2ES. By combining the expertise of AI developers, atmospheric and ocean scientists, and social scientists, AI2ES offers a unique lens on the goal of understanding trust in AI-based methods.

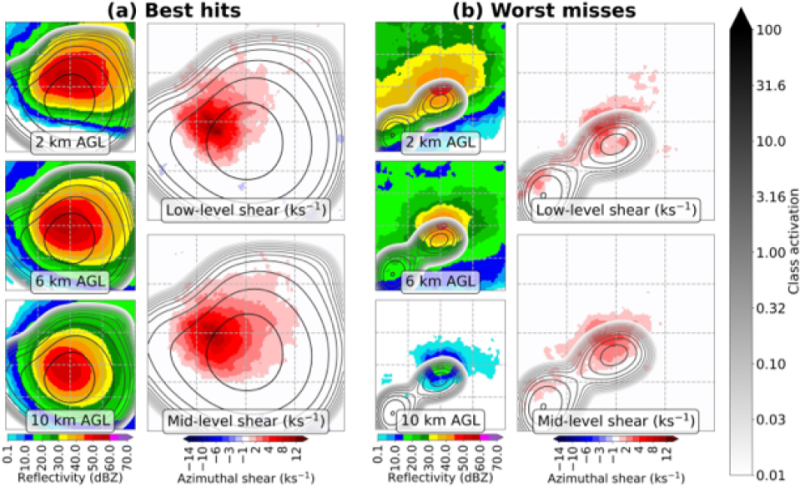

AI2ES is working on innovations that can more quickly forecast extreme weather events, such as tornadoes and hail. In the U.S., severe weather causes billions of dollars of damage annually. "With tornadoes, the average warning lead time is about 15 minutes. To improve this on days when forecasters are literally making life-or-death decisions, the AI that you are using must be really trustworthy in tense, tight situations," McGovern said. An improved severe weather prediction system based on AI2ES work is being tested by the National Oceanic and Atmospheric Administration.

The work at AI2ES is also helping to correct biases that exist in weather and climate forecasting. "Tornadoes and hail are reported where there are more people. If you look at a map of where hail is reported, you see highways and roads, but hail falls everywhere," McGovern said. AI2ES is developing techniques to identify and correct biases in the full AI development lifecycle. This will lead to more trustworthy AI.

Another AI2ES model is used to predict water temperatures in the Laguna Madre and other shallow Texas water bodies where endangered sea turtles and commercially and ecologically important fishes reside. During strong cold fronts, water temperatures drop considerably and can leave cold-blooded sea turtles and fishes cold-stunned or lethargic. AI predictions allow agencies to prepare for sea turtle rescues and facilitate the voluntary interruption of shipping traffic, which helps mitigate the impact of these events.

Working with local groups in the Corpus Christi, Texas, area, AI2ES was able to introduce a trustworthy AI-based system that could forecast the onset and duration of potential cold-stunning events up to 120 hours in advance. The improved system was deployed in each of the past three winters. During this past cold season, over 700 sea turtles were rescued, and more than 500 were rescued the prior season. February 2021 saw the largest recorded sea turtle cold-stunning event in the U.S., with over 13,000 affected. “The AI forecasting is working well, and we have a good partnership with agencies, NGOs and private sector partners who make decisions based on these forecasts,” McGovern said. “Part of trustworthy AI is building up relationships over time. Texas A&M Corpus Christi had been working on this for years, and we were able to improve their models.”

Educating future AI practitioners and researchers

As with all NSF-supported programs, education is a critical component of the AI Institutes, as training future generations of innovators is just as important as the development of AI itself. IFML has launched the first online master’s program in AI of its kind and is training graduate students to apply AI to fields such as finance, healthcare, and biotech. The effort spans multiple departments at the university as well as the Texas Advanced Computing Center to leverage NSF-powered supercomputing capabilities. The program likely would not exist without NSF support of the AI institute, Klivans said. IFML is also running AI education modules in high schools across Texas and hopes to expand nationally next year.

AI2ES is working with Del Mar College, a community college in Corpus Christi, to develop an AI certification program and is looking to expand the model to other community colleges. The first cohort just graduated. “We are reaching an audience we don’t typically reach, including high-school students doing concurrent enrollment and underrepresented groups. There is also a great amount of K-12 outreach with programs to teach kids about the excitement of STEM careers,” McGovern said.

Incorporating trustworthy AI across all scientific disciplines opens up possibilities that may not yet be imagined, according to McGovern. "With funding enabling interdisciplinary collaboration, we were able to create what NSF wanted, which is a whole that is greater than the sum of its parts. We're two-and-a-half years in, and I can say we're really doing convergence research. We are being seen as a worldwide leader in AI for weather." Within 10 years, "we could be using AI to identify new foundations in weather, ocean and climate science. The amount of data we have now is overwhelming for a human. With AI, we will be able to develop new hypotheses, innovative solutions, and create new areas of study not thought of today."