Why are supercomputers so important for COVID-19 research?

We spoke with NSF's Manish Parashar to find out

In the midst of the current pandemic, researchers across the country are turning to the most powerful high-performance computing resources available to gain a better understanding of COVID-19.

In order to study how the virus interacts with the human body on an atomic level, as well as how it spreads from one person to another, researchers are developing detailed models of the virus’ structure and transmission that rely on complex simulations involving large amounts of data.

To perform the necessary calculations, researchers are increasingly turning to some of the most powerful and uniquely capable computing facilities in the world, such as the NSF-funded Frontera supercomputer located at The University of Texas at Austin. As a leading supporter of academic computing research and infrastructure, NSF is playing an important role in facilitating much-needed COVID-19 research.

We talked with Manish Parashar, head of NSF’s Office of Advanced Cyberinfrastructure, about how NSF is marshaling its computing resources to contribute to COVID-19 research.

Q: What role has NSF played in marshaling computing resources to aid COVID-19 research?

Parashar:

When considering all aspects of understanding and responding to the current pandemic, we at NSF immediately realized that computing and data were going to play very important roles. So, in early March, NSF took the lead in formulating a research response by issuing a Dear Colleague Letter, NSF 20-055 (since replaced by the updated NSF 20-052), which immediately made all of NSF’s computing resources accessible to the scientific community.

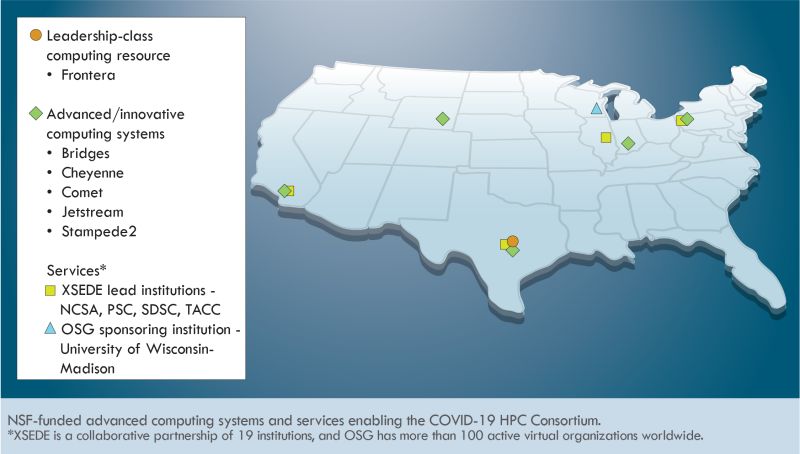

NSF also played a key role in the effort to combine the computing resources that we fund with those of other federal agencies, industry and academia to better facilitate COVID-19 research and the fight to stop the virus. Specifically, NSF co-led the establishment of the COVID-19 High Performance Computing (HPC) Consortium, a new public-private consortium announced by the White House in late March to offer researchers free computing time on its members’ world-class machines. The NSF-funded XSEDE project serves as the hub of this consortium, providing a portal and associated services to match researchers to resources.

In addition to dedicating NSF-supported advanced computing resources to the initiative, the agency provided significant expertise towards setting up the consortium. For example, a big part of launching the consortium was creating a portal where the community could find available computing resources and request access to them. Because the XSEDE project had already developed a similar system, we were able to share this expertise with the consortium. We brought the XSEDE team together and they basically built the portal for the consortium over a weekend in order to bring it online quickly. Since then, the XSEDE team has been leading these activities, including coordinating the evaluation of the technical merit of proposals coming in and matching those requests to the resource providers. They’ve been coordinating these activities with a team that crosses government, academia and industry to make important decisions in a timely manner so this crucial research can get underway.

Q: What NSF-funded computing resources are being enlisted for COVID-19 research through the COVID-19 HPC Consortium?

Parashar:

NSF is currently contributing around 84 petaflops and over 58,000 nodes to the consortium’s computing resources. What does that mean? Well, to match what NSF’s current contributions can perform in just one second, you would have to perform one calculation per second for 2,671,362,889 years.
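The comparison above can be checked with a few lines of arithmetic. The following is a minimal sketch, not NSF's own calculation: it assumes that one "calculation" means one floating-point operation, that the petaflops figure is a sustained rate, and that a year has 365 days. The function names are hypothetical. Under these assumptions, 84 petaflops works out to about 2.66 billion years, and the quoted figure of 2,671,362,889 years corresponds to a contribution of roughly 84.24 petaflops, consistent with "around 84".

```python
SECONDS_PER_DAY = 24 * 60 * 60


def years_at_one_calc_per_second(petaflops, days_per_year=365):
    """Years needed, at one calculation per second, to match one second of work.

    Assumes 1 petaflops = 1e15 calculations per second (illustrative only).
    """
    calcs_in_one_second = petaflops * 1e15
    seconds_per_year = days_per_year * SECONDS_PER_DAY
    return calcs_in_one_second / seconds_per_year


def implied_petaflops(years, days_per_year=365):
    """Inverse: the petaflops rate implied by a given number of years."""
    return years * days_per_year * SECONDS_PER_DAY / 1e15


if __name__ == "__main__":
    # Years implied by a round 84 petaflops.
    print(f"{years_at_one_calc_per_second(84):,.0f} years")
    # Petaflops implied by the figure quoted in the interview.
    print(f"{implied_petaflops(2_671_362_889):.2f} petaflops")
```

Using a 365.25-day year instead shifts the result by less than a tenth of a percent, so the order of magnitude (billions of years) does not depend on that choice.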

We are dedicating a range of NSF-supported resources that involve a number of different organizations and institutions, including:

- XSEDE

- Pittsburgh Supercomputing Center

- Texas Advanced Computing Center

- San Diego Supercomputer Center

- National Center for Supercomputing Applications

- Indiana University Pervasive Technology Institute

- Open Science Grid

- National Center for Atmospheric Research

NSF brings probably the most diverse set of resources to this consortium. It includes our "leadership-class" resources such as Frontera, the most powerful computer located on a U.S. academic campus, but also includes resources that are more cloud-like. Examples include resources that support data-intensive computations, like Jetstream; those that are particularly suitable for artificial intelligence-driven applications, such as Bridges and Comet; and computing grids dedicated to high-throughput computing, such as the Open Science Grid. The goal is to provide a diversity of resources so researchers can find the right resource for the application they are looking at.

This is important because researchers use a wide set of approaches to get a complete understanding of the virus, and NSF wants to make sure they have the right tools to conduct their research. For example, researchers are working on computer models and simulations to better understand the virus’ structure. They need a very large system that can handle that type of research.

At the same time, researchers are also mining large amounts of data and applying AI techniques to large datasets. These applications require more cloud-based resources as well as a large memory capacity. That’s where resources like Bridges and Comet come into play. These allow you to do very deep data analytics that require large amounts of memory. We want to make sure we have the right type of resource to address the appropriate question that the scientist is asking.

Q: What are a few examples of COVID-19 research currently being facilitated by NSF-funded computing resources?

Parashar:

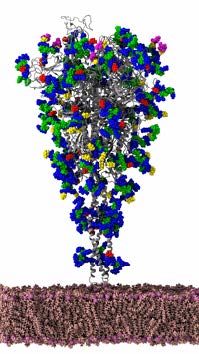

Rommie Amaro, professor of chemistry and biochemistry at the University of California, San Diego, is building a model of the exterior structure of the SARS-CoV-2 coronavirus in order to support the development of highly targeted drugs for potential treatment. Amaro’s research requires massive amounts of computing power, so she was given full access to Frontera, the most powerful academic supercomputer in existence, which is located at the Texas Advanced Computing Center at UT Austin.

Frontera’s system roughly equals the computing power of 100,000 desktop computers. Because of its power, Frontera is being used for a number of different COVID-19 research projects that are being facilitated by the COVID-19 HPC Consortium. For example, Ashok Srinivasan, a computer scientist at the University of West Florida, is using Frontera to study how to mitigate the risk of the virus spreading in constrained physical environments such as an airplane cabin.

Madhav Marathe, professor of computer science and biocomplexity at the University of Virginia’s Biocomplexity Institute, is leading a team of researchers across 14 U.S. institutions to harness the power of Big Data computing to plan for and respond to outbreaks such as COVID-19. Marathe’s team is using resources like Bridges to study how complex networks of human behavior affect the virus’ patterns of transmission. Their findings are assisting decision-makers at a number of institutions and health agencies to understand and manage the current epidemic as well as predict future outbreaks.

Q: What do you see as the next steps? What more can NSF do to further support our understanding of and response to the pandemic?

Parashar:

As the COVID-19 HPC Consortium is ramping up, we are seeing new gaps emerge in our support of COVID-19 research that need to be addressed. We’ve realized that there is a growing need for expertise and support services that can help scientists make more effective use of high-performance computing resources. That need ranges from assistance with selecting and effectively using the right HPC resource, to migrating application software to that resource, to addressing security and privacy issues related to applications and data.

Even beyond supporting research directly related to COVID-19, we are seeing a similar need for such expertise and services from the broader research enterprise. In order to continue ongoing work in the face of the disruptions caused by the current pandemic, research organizations are moving data and services offsite and transitioning to remote operations. They are encountering the same challenges as COVID-19 researchers related to migrating applications and software as well as data security and privacy issues.

NSF is currently exploring how we can provide rapid-response services and expertise to the community to help address these near-term challenges. We hope that this assistance will enhance the work of the COVID-19 HPC Consortium and the fight against the global pandemic, as well as contribute to helping manage and maintain the continuity of the research enterprise during these uncertain times.

Because we are working to understand and respond to a pandemic that is global in its reach, we also see an opportunity to extend the COVID-19 HPC Consortium to include international partners. NSF-funded projects such as XSEDE already have such international partnerships, which I believe can be leveraged to help address this global challenge.

COVID-19 Resources: Coronavirus.gov; Coronavirus Disease 2019 (COVID-19); What the U.S. Government is Doing